Interview with Anton Kozhevnikov, Co-Winner of the Gordon Bell Prize Honorable Mention for Special Achievements in Scalability

Anton Kozhevnikov is one of the co-winners of the Gordon Bell Prize Honorable Mention for Special Achievements in Scalability at the last Supercomputing Conference, SC10, in New Orleans (see our previous posting). Anton is a postdoc at the Institute for Theoretical Physics at ETH Zurich and works in the Computational Physics group under the supervision of Prof. Thomas C. Schulthess (ETH Zurich and CSCS).

We asked Anton about his work and the challenges he faced.

Q: What is the relevance of your research for physics?

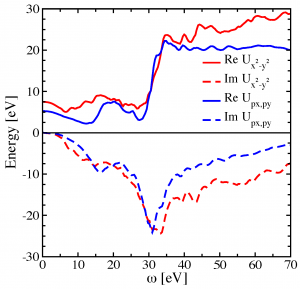

We are working on a first-principles parametrization of the Hubbard model. Why do we need this? The Hubbard model is important because it captures the essential properties of strongly correlated systems (for example, it can describe a high-temperature superconducting transition). But like any model, it has a set of freely adjustable parameters that affect the resulting solution. By parametrizing the model from first principles (i.e. calculating the material-specific parameters of the model knowing only the crystal structure of a given compound), we eliminate the uncertainty in the choice of parameters of the Hubbard model.
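For reference, the textbook single-band Hubbard Hamiltonian shows what kind of parameters are meant: a hopping amplitude $t$ between neighbouring sites and an on-site Coulomb repulsion $U$. This one-band form is given only as an illustration; the 3-band model for the CuO2 plane discussed in this work has more parameters (orbital energies and several hopping and interaction terms).

```latex
H = -t \sum_{\langle i,j \rangle, \sigma} \left( c^{\dagger}_{i\sigma} c_{j\sigma} + \text{h.c.} \right)
    + U \sum_{i} n_{i\uparrow} n_{i\downarrow},
\qquad n_{i\sigma} = c^{\dagger}_{i\sigma} c_{i\sigma}
```

Here $c^{\dagger}_{i\sigma}$ creates an electron with spin $\sigma$ on site $i$, and $\langle i,j \rangle$ runs over nearest-neighbour pairs. A first-principles parametrization calculates values such as $t$ and $U$ (or their multi-band counterparts) for a specific material instead of treating them as free parameters.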

I should mention that the underlying theory for the first-principles parametrization of the Hubbard model is well known and accepted in the solid-state community. In this work we have demonstrated that this type of calculation can be done very quickly and efficiently.

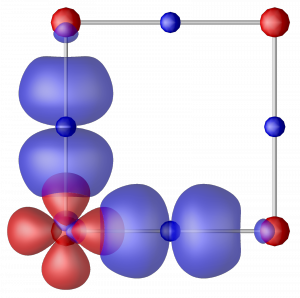

The picture above shows the isosurfaces (transparent red and blue) of the basis functions for the 3-band Hubbard model of the prototypical high-Tc superconductor La2CuO4. Red balls represent copper atoms and blue balls represent oxygen atoms. This classic CuO2 plane is responsible for superconductivity in the cuprates.

Q: What sustained performance did you reach?

We reached 1.5 petaflops on 221’440 computational cores of Jaguar XT5.
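For a rough sense of scale, dividing the sustained rate by the number of cores gives the average throughput per core. This is simple arithmetic on the two figures quoted in the answer, not a separately measured number:

```latex
\frac{1.5 \times 10^{15}\ \text{flop/s}}{221\,440\ \text{cores}} \approx 6.8 \times 10^{9}\ \text{flop/s per core}
```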

Q: You received the prize for “special achievements in scalability”. What is so special about your work?

We demonstrated excellent strong scaling of the code. In all our runs, the time to solution was very close to ideal scaling, ~1/N, where N is the number of cores.
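To make “close to ideal scaling” concrete: with perfect strong scaling (fixed problem size), the time on N cores is the reference time multiplied by N_ref / N, and the parallel efficiency is that ideal time divided by the measured time. The minimal Python sketch that follows computes this efficiency. The function name and all timings are hypothetical, chosen only for illustration; they are not results from the runs described in this interview.

```python
def strong_scaling_efficiency(n_ref, t_ref, n, t):
    """Parallel efficiency of a run on n cores relative to a reference run.

    Ideal strong scaling means the time drops as 1/N:
    t_ideal = t_ref * n_ref / n. An efficiency of 1.0 is perfect scaling.
    """
    ideal_time = t_ref * n_ref / n
    return ideal_time / t


# Hypothetical (cores, seconds) pairs for illustration only; NOT measured data.
runs = [(12_000, 1000.0), (24_000, 505.0), (48_000, 260.0)]

n_ref, t_ref = runs[0]  # the smallest run serves as the reference point
for n, t in runs:
    eff = strong_scaling_efficiency(n_ref, t_ref, n, t)
    print(f"{n:>7} cores: {t:7.1f} s, efficiency {eff:.2f}")
```

Efficiencies that stay near 1.0 as the core count grows are what “time to solution close to ~1/N” means in practice.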

Q: What have been the challenges to get such a high performance?

The DRC (density-response code) was redesigned considerably to take advantage of Cray’s linear algebra library (LibSci) and of Cray’s fast interconnect. The main challenge was to finish the project on time and end up with fast and reliable code.

Q: How has the work been coordinated between the different partners (ETH Zurich, ORNL, University of Tennessee)?

The work on the response code started two years ago, when I was a postdoc at the University of Tennessee with Professor Adolfo Eguiluz as my adviser. At that time we cared more about the background physics than about performance. Later I joined the group of Professor Thomas Schulthess at ETH Zürich. The development of the response code continued here in Switzerland, but now we paid more attention to optimization and speed. Thomas Schulthess was responsible for the general development strategy and the coordination of the scientific research, Adolfo Eguiluz worked on the physics side, and I did the coding, optimization, testing and production runs.